Validating your SAS system in the Cloud

Note: The following article reflects my personal point of view regarding validating your SAS system in the Cloud.

Cloud computing is a highly dynamic and expandable development in the way people use computers. Additional computing power and resources can be added to your system at run-time, and the system runs on an operating system that is guaranteed to be kept up to date. These systems are becoming more prevalent, and the latest version of SAS Viya (Viya 4) is aimed at the Cloud.

To avoid confusion, let’s start with a few definitions concerning Cloud Computing and Validation:

- Cloud Provider (CP): The vendor that is supplying your Cloud Computing Services. It might be a Public Cloud, hosted by Microsoft, Amazon or some other CP. It might be a Private Cloud, hosted either by your own IT department or a trusted secure vendor. It might be a Hybrid system using bits of both;

- Cloud Computing: Using IT systems over a network (usually the internet);

- Virtual Machine (VM): A part of a much larger physical host machine that is dedicated to your use as if it were an independent, self-standing system. VMs can be dynamically resized and can run a different O/S from the host. Additional resources (more CPUs, memory, storage, etc.) can be added without having to stop the system;

- Verification: Finding out what really happens – the truth about your system;

- Qualification: Checking if the Verification is what you expect it to be;

- Validation: Collating all of this to make sure that you meet your Requirements;

- SLAs: Service Level Agreements, the committed standard of service that will be supplied;

The CP looks after the hardware, the virtual machines, the O/S and security patching, availability, storage, etc. They make regular and dynamic updates and changes to these outside your Change Control systems. In addition, the Cloud VM you are using today is not necessarily the one you validated on day one, or even the one you validated yesterday.

You are using systems that have not been locked down the way they used to be. You also have no control over these changes, which is not what you are used to with Validated systems.

You can get round some of these issues by obtaining SLAs from your CP that ensure all their changes follow their own Change Control procedures. Document these SLAs as part of your initial Validation bundle. This also means that some of the things you previously had to validate (backups, access, O/S patching, etc.) are no longer your direct concern. These CPs are large professional organisations with their own rigid procedures and checks. This is where you have to put some trust in their SLAs and let them do what they do best: maintain up-to-date, reliable IT systems.

Next problem: your parts of the Clinical IT system must still be Validated.

A traditional approach to validating Clinical Systems is:

- Spend weeks or months running verifications through the system;

- Qualify the results;

- Lock down the systems;

- Validate by checking the Qualifications and the Documentation to ensure the Requirements are met;

- Put strict Change Control and SOPs in place to regulate the system;

- Only allow modifications that adhere to your strict Change Control policies;

You will have difficulty doing this in a Cloud environment. What you need is a new validation toolset and a different approach.

The solution: Continuous Validation

Continuous validation means providing documented evidence (the qualified output from your verification checks) that a cloud service not only met the acceptance criteria on day zero, but that it continues to meet them today.

It mitigates the risk of changes in the CP’s sphere of influence affecting the behaviour of your Cloud Application.

Suggestions for doing this in SAS:

- Change your thinking straight away: you need a new toolset for the validation, with no paper-based or manual testing involved;

- Change from a Paperwork-defined Validation process to a Software-defined one. What you used to do (locked-down systems, etc.) no longer applies. You now need continuous Smoke & Regression testing;

- Break down your processes into simple steps, covering the full range of your requirements;

- Develop small SAS Jobs (in SAS Studio, SAS-EG or SAS-DI) that test just one or two steps at a time. These become the Verification steps. Examples include:

  - Check the initialisation code (put ‘old’ files and data in place, ensure they are removed, ensure variables are set, etc.);

  - Check for input files (put your low-volume input files in place and check that they are accessible and usable);

  - Check database access (open a known table, count the entries, return True if the count is greater than 0);

- By definition, your checks will also test parts of the underlying services (Is SAS running? Is the network connection up?). You don’t need to check these services specifically if other checks are using them anyway;

- Include “Error condition” Verification steps to find out what your system does when it encounters a problem (missing files or data, an unavailable database table, etc.);

- Build comparison tests into the end of each Job, so that you automatically check the Jobs are successful and that errors are handled correctly. These become the Qualification steps;

- Make sure that your comparison tests tolerate acceptable differences, such as <date>/<time> fields changing or sort orders varying from run to run. For instance, time-stamped files that normally come back in the order A-B-C might come back as C-A-B because this time file C was ready before the others;

- Make all these Jobs low volume and fast. You need realistic data, but only sufficient records to test the system rather than stress it;

- You also need the data set to be large enough to cover a range of possibilities. If a procedure within your system has 10 parameters, and different <null> values in various fields lead to different results, then test the most likely combinations. This might mean that you need 10 or 20 (or more) short, quick tests for this one procedure, all of which should qualify as a Pass;

- Have one Validation Flow, or a series of Sub-Flows, to execute all these Jobs in the correct order;

- Decide when to schedule your Validation Flow(s): daily, weekly, weekdays only or on start-up. The decision is yours;

- When you make any Change Management controlled modifications to your parts of the system make sure any affected verification checks are updated;

- When new Requirements arrive, make sure you develop the code and Jobs for new automated tests. In effect, you are doing your own CI/CD on the CI/CD pipeline;

- Disadvantage of all this: It takes time to set up and get working;

- Advantage of all this: once it is working, future Validation is much easier and quicker, and it is automated;
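In this approach, the comparison tests that tolerate acceptable differences would be written in SAS at the end of each Job. The minimal Python sketch below shows only the logic. It masks date/time fields and, optionally, ignores record order before comparing today's output with a stored baseline, so A-B-C and C-A-B count as the same result. The timestamp pattern, the function names and the sample records are all assumptions for illustration. They are not part of any SAS API.

```python
import re

# Assumed date/time format (e.g. 2024-01-01 or 2024-01-01T09:00:00);
# adapt the pattern to the formats your outputs actually contain.
TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2}(?:[T ]\d{2}:\d{2}:\d{2})?")


def normalise(record: str) -> str:
    """Mask date/time fields so that they cannot cause spurious differences."""
    return TIMESTAMP.sub("<DATETIME>", record.strip())


def outputs_match(expected: list[str], actual: list[str], ignore_order: bool = True) -> bool:
    """Qualification check: compare actual output against the expected baseline.

    Date/time fields are always masked; when ignore_order is True the records
    are sorted first, so A-B-C and C-A-B count as the same result.
    """
    exp = [normalise(r) for r in expected]
    act = [normalise(r) for r in actual]
    if ignore_order:
        exp, act = sorted(exp), sorted(act)
    return exp == act


# Hypothetical baseline from day one and today's run (different times, different order).
baseline = ["fileA 2024-01-01T09:00:00 OK", "fileB 2024-01-01T09:00:05 OK", "fileC 2024-01-01T09:00:07 OK"]
today = ["fileC 2024-06-12T09:00:01 OK", "fileA 2024-06-12T09:00:02 OK", "fileB 2024-06-12T09:00:04 OK"]
print("PASS" if outputs_match(baseline, today) else "FAIL")  # PASS
```

Mask only the differences you have explicitly agreed are acceptable. Anything else, such as a missing record, must still produce a Fail.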

A bit more detail:

The key is to plan, plan, plan.

Start with your User Requirements (functional, non-functional, regulatory, performance, security, logging, disaster recovery, interface, etc). What is the intended use of your Cloud application?

Work out which features you should test, and keep these tests simple! Decide how much ‘negative testing’ you should do. For example: missing input files; incorrectly formatted files; empty files; files with ‘the wrong content’; bad or incorrect pathnames; insufficient access rights; checking logs; checking each interface; workflow; configuration.

By keeping these short, simple and fast you make code re-use possible. Use parameters if required. For example, if you have a test to see if you can open an input file, then parameterize the test to support opening the passed <pathname> argument rather than having a hard-coded pathname.
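The parameterised file check described above would be a SAS Job in practice. The minimal Python sketch below illustrates the idea: a single reusable test takes the pathname as an argument and returns a simple Pass/Fail. The name `check_input_file` is hypothetical. Treating a file as usable if it can be opened and is not empty is an assumption, and your own Requirements may define "usable" more strictly.

```python
import os
import tempfile


def check_input_file(pathname: str) -> str:
    """Reusable, parameterised test for one input file (hypothetical helper).

    Returns "PASS" if the file can be opened for reading and is not empty
    (an assumed definition of "usable"); otherwise returns "FAIL".
    """
    try:
        with open(pathname, "rb") as fh:
            return "PASS" if fh.read(1) else "FAIL"
    except OSError:  # missing file, bad path, insufficient access rights, etc.
        return "FAIL"


# Usage: the same test is reused for every path passed to it.
with tempfile.TemporaryDirectory() as tmp:
    good = os.path.join(tmp, "input.csv")
    empty = os.path.join(tmp, "empty.csv")
    with open(good, "w") as fh:
        fh.write("id,value\n1,42\n")
    open(empty, "w").close()
    results = {
        "good": check_input_file(good),
        "empty": check_input_file(empty),
        "missing": check_input_file(os.path.join(tmp, "missing.csv")),
    }
print(results)
```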

Develop functional specifications for these tests. Have each test Verify itself against its expected result and, from this, return a simple Pass/Fail result.
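The database-access check mentioned earlier (open a known table, count the entries, pass if the count is greater than 0) is a good example of a test that checks itself and returns Pass/Fail. The sketch below uses Python's built-in `sqlite3` with an in-memory database purely as a stand-in for your real clinical database. The helper name and the table names are hypothetical.

```python
import sqlite3


def check_table_access(conn: sqlite3.Connection, table: str) -> str:
    """Open a known table, count its rows and PASS only if the count is > 0.

    Any database error (missing table, no access, lost connection) is a FAIL.
    The table name is assumed to come from trusted test configuration; it is
    quoted as an identifier because table names cannot be bound as parameters.
    """
    quoted = table.replace('"', '""')
    try:
        (count,) = conn.execute(f'SELECT COUNT(*) FROM "{quoted}"').fetchone()
    except sqlite3.Error:
        return "FAIL"
    return "PASS" if count > 0 else "FAIL"


# Stand-in database for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE subjects (id INTEGER, site TEXT)")
conn.executemany("INSERT INTO subjects VALUES (?, ?)", [(1, "A"), (2, "B")])
conn.execute("CREATE TABLE visits (id INTEGER)")

print(check_table_access(conn, "subjects"))  # PASS
print(check_table_access(conn, "visits"))    # FAIL: table is empty
print(check_table_access(conn, "no_such"))   # FAIL: table does not exist
```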

Code these tests in small and fast SAS Jobs. If you are continuously validating a large system you might have hundreds of small tests to run.

Test each and every SAS Job.

Automate these tests by wrapping them in one or more SAS Flows. Get the parallel processing and dependencies correct in these flows so that tests don’t fail because you are trying to perform step X when step X-1 has not yet completed.
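In SAS, dependencies and parallelism are defined in the Flow itself. The Python sketch below, which uses the standard-library `graphlib.TopologicalSorter`, illustrates the underlying idea. It groups tests into "waves": every test in a wave can run in parallel, and no test starts before the tests it depends on have completed. The test names and dependencies are hypothetical. Waiting for a whole wave to finish is a simplification, because a real scheduler can start each test as soon as its own predecessors are done.

```python
from graphlib import TopologicalSorter

# Hypothetical tests, each mapped to the set of tests it depends on.
dependencies = {
    "init_environment": set(),
    "check_input_files": {"init_environment"},
    "check_db_access": {"init_environment"},
    "run_derivations": {"check_input_files", "check_db_access"},
    "check_outputs": {"run_derivations"},
    "cleanup": {"check_outputs"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tests into waves: tests within a wave can run in parallel, and a
    wave starts only after every test in the previous waves has completed."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as completed
    return waves


for number, wave in enumerate(execution_waves(dependencies), start=1):
    print(number, wave)
```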

Have a SAS Reporting Job that runs at the end of the SAS Flow. Get this to produce the Validation Report, the KPIs and the simple Pass/Fail result that can be placed in your dashboard.
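The reporting step would be a SAS Job in practice. Its core logic can be sketched in a few lines of Python: collect each test's Pass/Fail result, derive some simple KPIs, and report an overall Pass only if every Qualification passed. The KPI names and the input format below are assumptions, not a prescribed report layout. Treating an empty run as a Fail is also a design choice: no results means nothing was validated.

```python
def validation_summary(results: dict[str, str]) -> dict:
    """Summarise individual "PASS"/"FAIL" results into simple, assumed KPIs.

    The overall status is PASS only if at least one test ran and every
    Qualification passed.
    """
    total = len(results)
    passed = sum(1 for outcome in results.values() if outcome == "PASS")
    return {
        "total": total,
        "passed": passed,
        "failed": total - passed,
        "pass_rate_pct": round(100 * passed / total, 1) if total else 0.0,
        "failed_tests": sorted(name for name, r in results.items() if r != "PASS"),
        "overall": "PASS" if total and passed == total else "FAIL",
    }


# Hypothetical results from one run of the Validation Flow.
run = {"check_input_files": "PASS", "check_db_access": "PASS", "check_outputs": "FAIL", "cleanup": "PASS"}
summary = validation_summary(run)
print(summary["overall"], summary["pass_rate_pct"], summary["failed_tests"])
# FAIL 75.0 ['check_outputs']
```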

The intention is that by automation, parallel processing and fast tests you will be able to run several hundred tests in a very short time period, ideally less than one minute.

Achieving this in the real world:

In the real world there is no single “Best Answer” to validating this sort of SAS system.

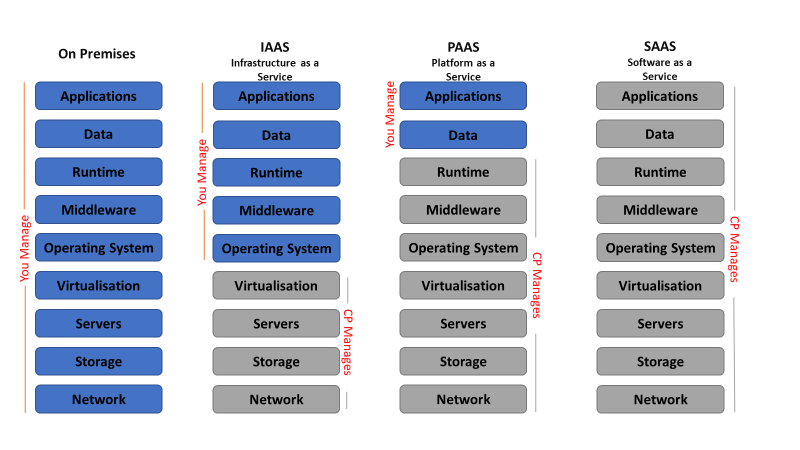

Every customer and every instance will have some variation that raises challenges. The range of tests will also vary depending on quite where you sit on the IaaS / PaaS / SaaS spectrum.

This is where you need to plan your way to an acceptable solution. Start with a Requirements Specification for your Validation system. Have Functional Specifications, Design Specifications, Technical Specifications, Coding Specifications, Testing Specifications, etc. A small amount of ‘upstream’ planning can save you a lot of ‘downstream’ effort.

- Start with your User Requirements (functional, non-functional, regulatory, performance, security, logging, disaster recovery, interface, etc). What is the intended use of your Cloud application?

- Break down your requirements into simple steps.

This is where you work out the high level details of what your system needs to do.

You might end up with hundreds of small tests here. Examples are:

  - Reading input files;

  - Accessing the Database System;

  - Setting initialisation variables;

  - Intermediate files appearing as they should;

  - Error conditions (negative testing) being trapped and reported;

  - The outputs being mathematically correct;

  - The final reports being as expected;

  - The end-of-run clean-up;

- Plan out the Verification required for each step.

This is where you find out what actually happens on <this> run of <this> step.

Keep these steps as simple and as self-contained as possible. This makes code re-use possible, and you can port these simple steps to other systems. Parameterizing your code (e.g. opening the passed pathname rather than a hard-coded pathname) makes code re-use easier still.

Examples are:

  - What happens when I try reading in the input files;

  - What happens when I access the Database System;

  - What initialisation variables are set;

  - Which Intermediate files appear as the system processes data;

  - Are Error conditions being trapped, and how are they reported? Error tests should check for realistic events such as: missing input files; incorrectly formatted files; empty files; files with ‘the wrong content’; bad or incorrect pathnames; insufficient access rights; checking logs; checking each interface; workflow dependencies; configuration errors, etc.;

  - What are the (mathematical) outputs;

  - Where is the final report, and what are its contents;

  - What happens in the end-of-run clean-up;

- Plan out the Qualification checks needed at the end of each step.

This is where you see if the Verification produced what you expected.

Examples are:

  - Were the input files readable and as expected;

  - Could I access the Database System as expected;

  - Were all the initialisation variables correctly set;

  - Did the Intermediate files appear as expected, and was their content correct;

  - Were the Error conditions trapped and reported correctly;

  - Are the (mathematical) outputs as expected;

  - Are the final report and its contents as expected;

  - Did the end-of-run clean-up produce its expected outcomes;

- Have each Qualification return a simple Pass/Fail result.

In the case of expected errors, get your logic correct: “Yes, this test produced a failure, but it is exactly the failure that we expected. If we had encountered any other error, this Fail would be a Fail; but because we got the error we expected, this Fail is actually a Pass.”

- Automate these tests by wrapping them in one or more SAS Flows. Get the parallel processing and dependencies correct in these flows so that tests don’t fail because you are trying to perform step X when step X-1 has not yet completed;

- Get a low volume but realistic data set that can be used for these tests;

- Have a SAS Reporting Job that runs at the end of the SAS Flow. Get this to produce the Validation Report, the KPIs and the simple Pass/Fail result that can be placed in your dashboard. The system is Validated if all the Qualifications produce Pass results;

- The intention is that, through automation, parallel processing and fast tests, you will be able to run several hundred tests in less than one minute. To start with, though, treat this run time as a target rather than a guaranteed performance level;
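The "expected error" logic above is easy to get backwards, so here it is as a minimal Python sketch. In practice this qualification logic would live in your SAS Jobs. A normal test passes only if the step succeeds. A negative test passes only if the step fails with exactly the expected type of error: success, or any other error, is a Fail. The helper name `qualify` and the missing-file path are hypothetical.

```python
from typing import Callable, Optional, Type


def qualify(step: Callable[[], object],
            expected_error: Optional[Type[Exception]] = None) -> str:
    """Run one verification step and qualify its outcome as "PASS" or "FAIL".

    - Normal test (expected_error is None): PASS only if the step succeeds.
    - Negative test: PASS only if the step raises exactly the expected error
      type; success, or any other error, is a FAIL.
    """
    try:
        step()
    except Exception as exc:
        if expected_error is not None and type(exc) is expected_error:
            return "PASS"  # the failure we expected, so this Fail is a Pass
        return "FAIL"      # an unexpected error is always a Fail
    return "PASS" if expected_error is None else "FAIL"  # negative test did not fail


def read_missing_file() -> str:
    # Hypothetical negative test: the input file is deliberately absent.
    with open("/no/such/dir/input.csv") as fh:
        return fh.read()


print(qualify(lambda: 1 + 1))                                        # PASS
print(qualify(read_missing_file, expected_error=FileNotFoundError))  # PASS
print(qualify(read_missing_file, expected_error=PermissionError))    # FAIL
print(qualify(lambda: 1 + 1, expected_error=FileNotFoundError))      # FAIL
```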

Also be aware that you might have to give SAS X-Command permissions to the Test Account User ID. Some of the activities (putting files and data in place, checking that they are accessible or cleaned up, etc.) are done more easily at Operating System level than in code. This might go against your existing policy of not allowing X-Commands. If so, get your Compliance people to grant an exception for just the Test Account User ID. The choice might be between spending months coding round things, with the inevitable delays and cost to your company, or the Compliance people recognising that this is an exception they need to accept.

Don’t make life difficult for yourself here.

The alternatives:

Yes, there really are alternatives here… but you might not like them:

- Stay on your old systems and fall behind the times;

- Give up on Computers altogether and go back to pen & paper;

- Never validate your systems after day-one;

- Have Compliance block the X-Command exception, costing your company tens of thousands in additional spending;

You might not have any choice here: you will need to address this challenge somehow.

My recommendation: Continuous Automated Validation.